Background

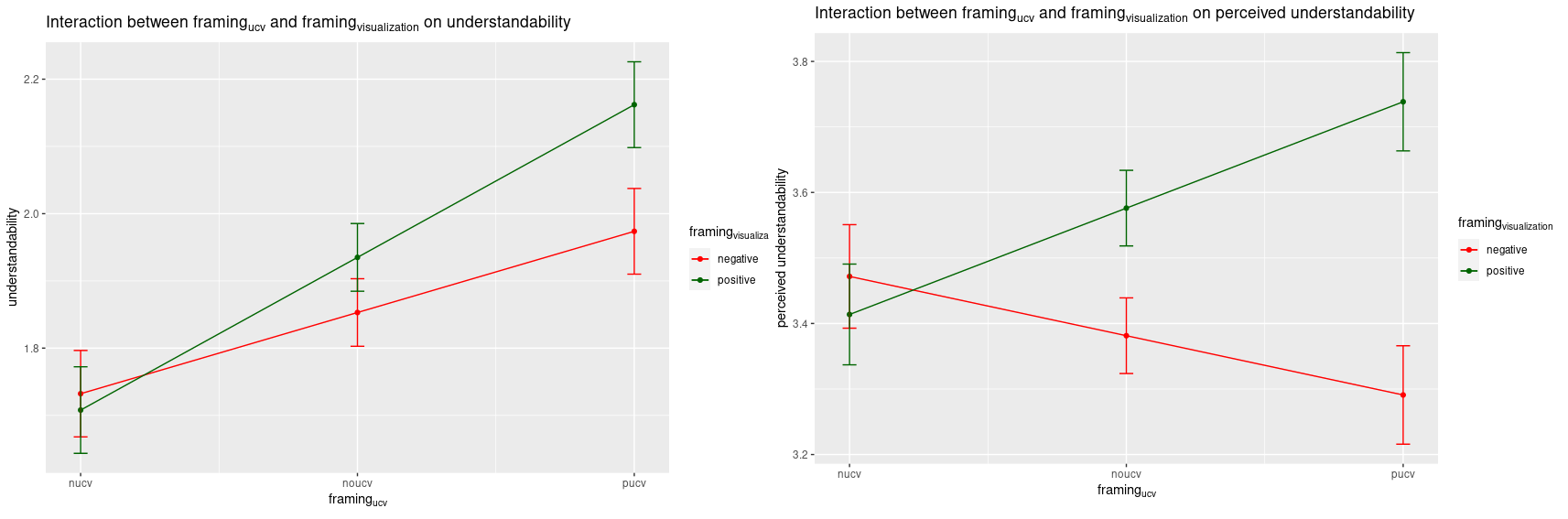

In XAI tools, we noticed that explanations containing multiple negations were quite difficult to understand. Although this observation was new in XAI, linguistics literature shows that sentences with double negations are harder to comprehend, and cognitive psychology theories hold that positive information is easier to process mentally. Given this gap in XAI and these insights from other fields, we systematically investigated how framing influences the understandability of explanations in XAI tools.

Research Questions

RQ1: How do feature explanations containing underlying continuous variables influence the ability to understand explanations?

RQ2: How does a framed visualization of feature explanations, and its valence alignment with the final prediction, influence the ability to understand explanations?

Process

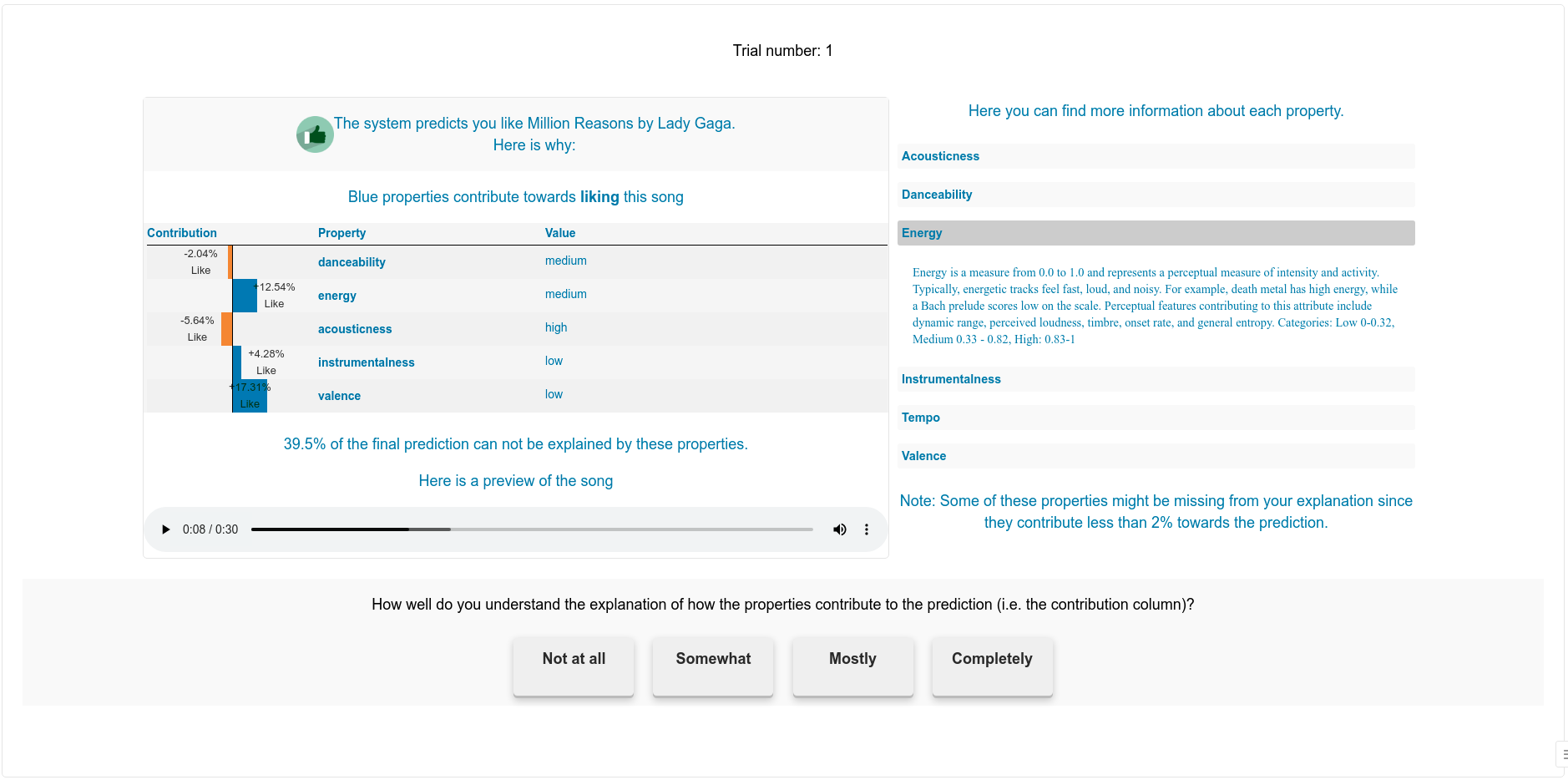

We started by identifying the key framing-related variables and conditions that might influence user understanding (see image below). We wanted to test how 12 conditions (3x2x2) influenced user understanding, and we ran the study in two application domains (music and loans) to check whether framing effects generalize across domains. To do so, we trained two explainable AI systems in Python: a music AI system and a loan decision support system. The music AI system predicts and explains whether a participant will like or dislike a song based on their favorite genre. The loan AI system predicts and explains whether different user personas are eligible or ineligible for a loan.

Using these systems, we designed and conducted an online experiment. We first ran a pilot study to check whether the user interaction and our understanding questions could be improved. After the pilot, we deployed the main study and recruited participants through the university's participant database. We determined the minimum number of participants through a power analysis.
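The section does not report which test, effect size, significance level, or target power the power analysis used. As a rough illustration only, the sketch below computes the per-group sample size for a two-sided, two-group between-participants comparison (for example pucv vs. nucv for H1b) using the normal approximation n = 2((z₁₋α/₂ + z₁₋β)/d)². The defaults (α = .05, power = .80) and the example effect size d = 0.5 are assumptions, not the study's values, and the function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-sided, two-sample comparison of means.

    Uses the normal approximation n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2.
    Assumed defaults (alpha=.05, power=.80) are illustrative, not the study's.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must be in (0, 1)")
    std_normal = NormalDist()  # mean 0, sd 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value, two-sided test
    z_power = std_normal.inv_cdf(power)          # quantile for the desired power
    # Round up: a fractional participant means we need one more.
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    # Assumed medium effect (Cohen's d = 0.5).
    print(n_per_group(0.5))  # 63
```

The normal approximation slightly underestimates the exact t-test result (64 per group for d = 0.5). A full analysis of the three-level between-participants UCV factor would instead call for an ANOVA-based calculation.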

Hypotheses

H1a: Feature explanations with underlying continuous variables will be easier to understand than explanations without underlying continuous variables.

H1b: Feature explanations with positive underlying continuous variables will be easier to understand than explanations with negative underlying continuous variables.

H2: Feature explanations with positively framed visualizations will be easier to understand than explanations with negatively framed visualizations.

H3: Feature explanations where the visualization framing and prediction are aligned will be easier to understand than explanations where the visualization framing and prediction are misaligned.

Our framing manipulations. We manipulated the UCV (underlying continuous variable) conditions (rows) between participants and the visualization framing (columns) within participants. The same conditions apply to a negative prediction, so 12 conditions exist in total (6 for each prediction class). pucv = positively framed UCVs; nucv = negatively framed UCVs.
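To make the 3x2x2 design concrete, the sketch below enumerates every combination of UCV condition, visualization framing, and prediction class, and marks a condition as aligned when the visualization framing matches the prediction's valence, as in H3. The level labels (`no_ucv`, `pucv`, `nucv`, `positive`, `negative`) and the names `Condition` and `all_conditions` are hypothetical; the study's materials may label them differently. Here a positive prediction stands for "like" in the music domain and "eligible" in the loan domain.

```python
from itertools import product
from typing import NamedTuple

# Hypothetical level labels; the study's own names may differ.
UCV_LEVELS = ("no_ucv", "pucv", "nucv")   # manipulated between participants
VIS_FRAMINGS = ("positive", "negative")   # manipulated within participants
PREDICTIONS = ("positive", "negative")    # e.g. like/dislike, eligible/ineligible


class Condition(NamedTuple):
    ucv: str
    vis_framing: str
    prediction: str

    @property
    def aligned(self) -> bool:
        # H3: framing and prediction are aligned when their valences match.
        return self.vis_framing == self.prediction


def all_conditions() -> list[Condition]:
    """Return the full 3 x 2 x 2 factorial crossing of the design."""
    return [Condition(*levels) for levels in product(UCV_LEVELS, VIS_FRAMINGS, PREDICTIONS)]


if __name__ == "__main__":
    conditions = all_conditions()
    print(len(conditions))                     # 12
    print(sum(c.aligned for c in conditions))  # 6
    for c in conditions:
        print(c.ucv, c.vis_framing, c.prediction, "aligned" if c.aligned else "misaligned")
```

Splitting the list by `prediction` yields the 6 conditions per prediction class described in the caption.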